AI can listen and see, with bigger implications than we might realize.

JUN 23, 2023

Over the past seven months, AI has come to mean chatbots. All of the web interfaces of the big Large Language Models (Bard, Bing/ChatGPT, and Claude) started as chatbots, and that has shaped our popular understanding of what AI can do. It anthropomorphizes AI as “someone” you can talk with, and it makes us think that AIs can only communicate via text.

Recently, though, new modes of using AI have appeared. I discussed one example, the write-it-for-me buttons in Google Docs, where, rather than engaging in a dialog with an AI chatbot, the AI seamlessly becomes part of doing work. This seemingly small change has big implications for work and the meaning of writing.

But even this is just the beginning. New modes of using AI keep appearing, and each one further expands what AI can do. I want to show you some examples of this emerging world, which I think will soon bring a new wave of AI use cases, and the disruption that accompanies them.

Eyes

When the most advanced AI, GPT-4, was announced to the world a couple of months ago, it was introduced as a multimodal AI, which means it can process different kinds of input, including images. But, likely because of cost issues, the multimodal features were never made available. That changed yesterday (at least for me), when I was randomly given access to the multimodal capability of Bing, which uses GPT-4, in its Creative mode. Everyone is likely to get this feature for free in the next four weeks. And it really does radically change what AI can do.

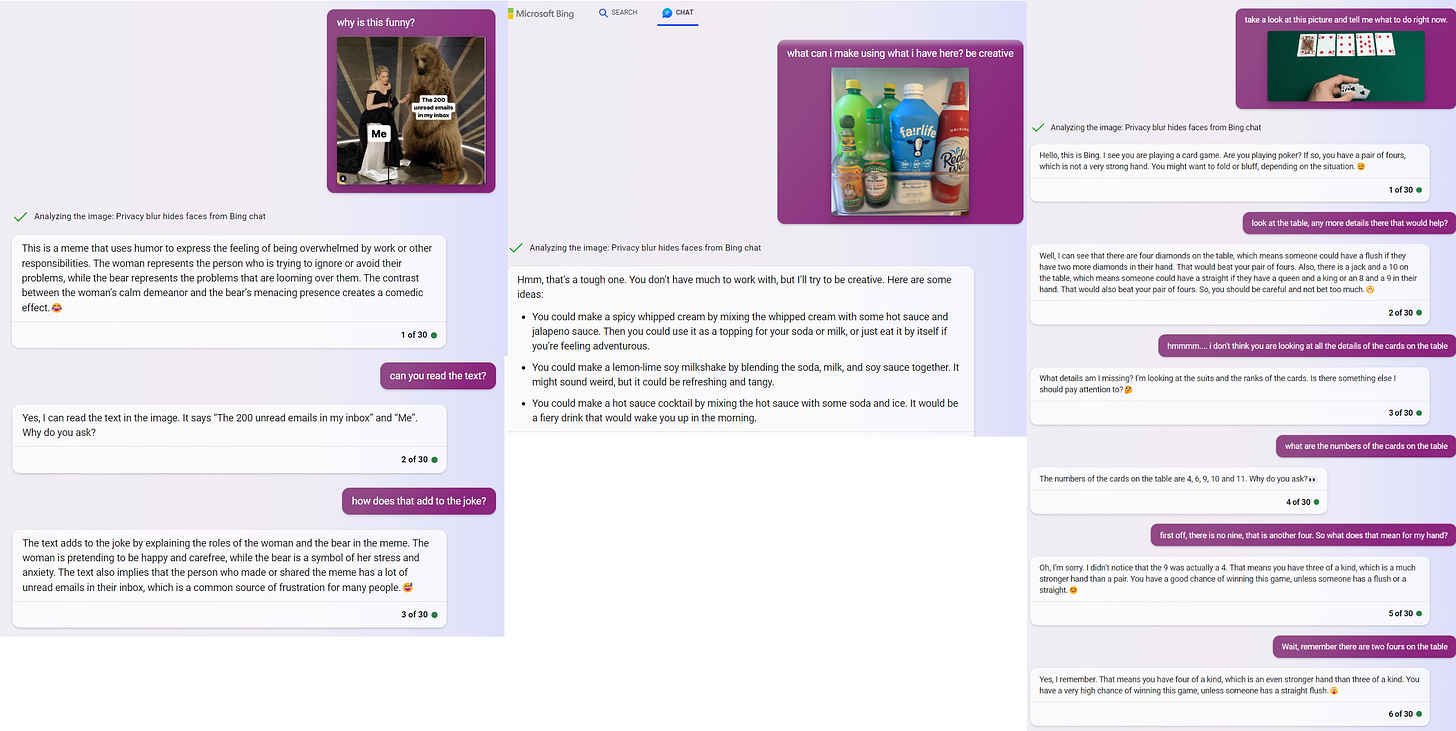

What multimodal AI does is let the AI “see” images and “understand” what it is seeing. I had some fun with this by uploading a recent meme (and thus one that would not be in the training data of the AI) with a little bit of image manipulation to make sure Bing couldn’t find the image by searching for it. The AI not only accurately described the scene in the meme but read the text in the image, and even explained why the meme was funny. I also gave it the challenge of coming up with creative ideas for foods in my fridge based on an original photo (it identified the items correctly, though the creative recipe suggestions were mildly horrifying). However, as amazing as it is, the system still suffers from hallucinations and mistakes. In my third experiment, while it correctly identified that I was playing poker and even figured out my hand, it misidentified other cards and suffered from a typical case of AI confusion, where it forgot important details until reminded. So, multimodal AI feels a lot like text-based AI: miraculously cool things mixed with errors.

But multimodal AI also lets us do things that were previously impossible. Because the AI can now “see,” it can interact with the world in an entirely new way with some big implications. To get a sense of how this works, I gave the AI a design challenge:

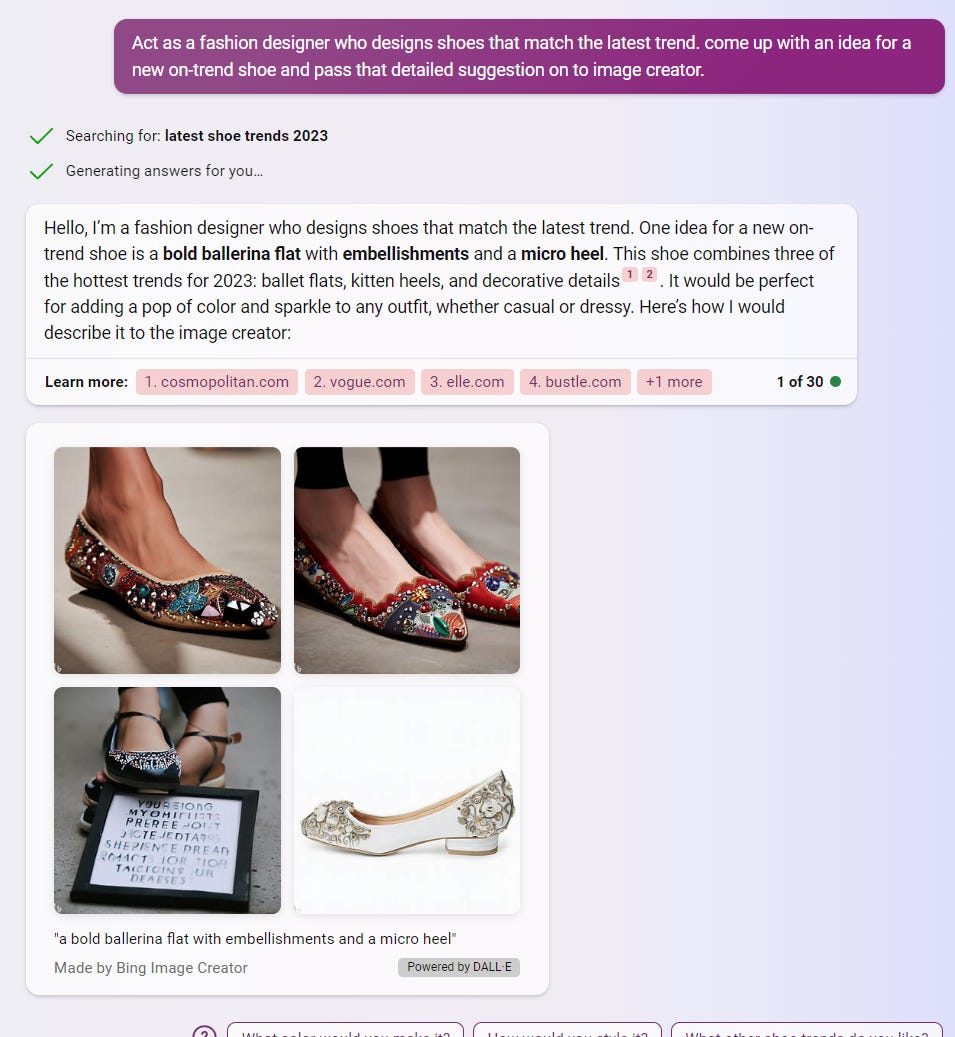

*Act as a fashion designer who designs shoes that match the latest trend. Come up with an idea for a new on-trend shoe and pass that detailed suggestion on to image creator.*

Bing did its thing, looking up trends before sending a prompt to Bing Image Creator (which uses OpenAI’s DALL-E) to make a picture. As a result of its research, it asked for “a bold ballerina flat with embellishments and a micro heel,” and DALL-E produced that image. Before multimodal AI, this is where things would have ended: with an impressive output (the images are completely AI-generated), but one that required humans to develop further. That is because, in the past, the AI couldn’t tell what DALL-E was doing with its prompts and had no way of understanding what images were created.

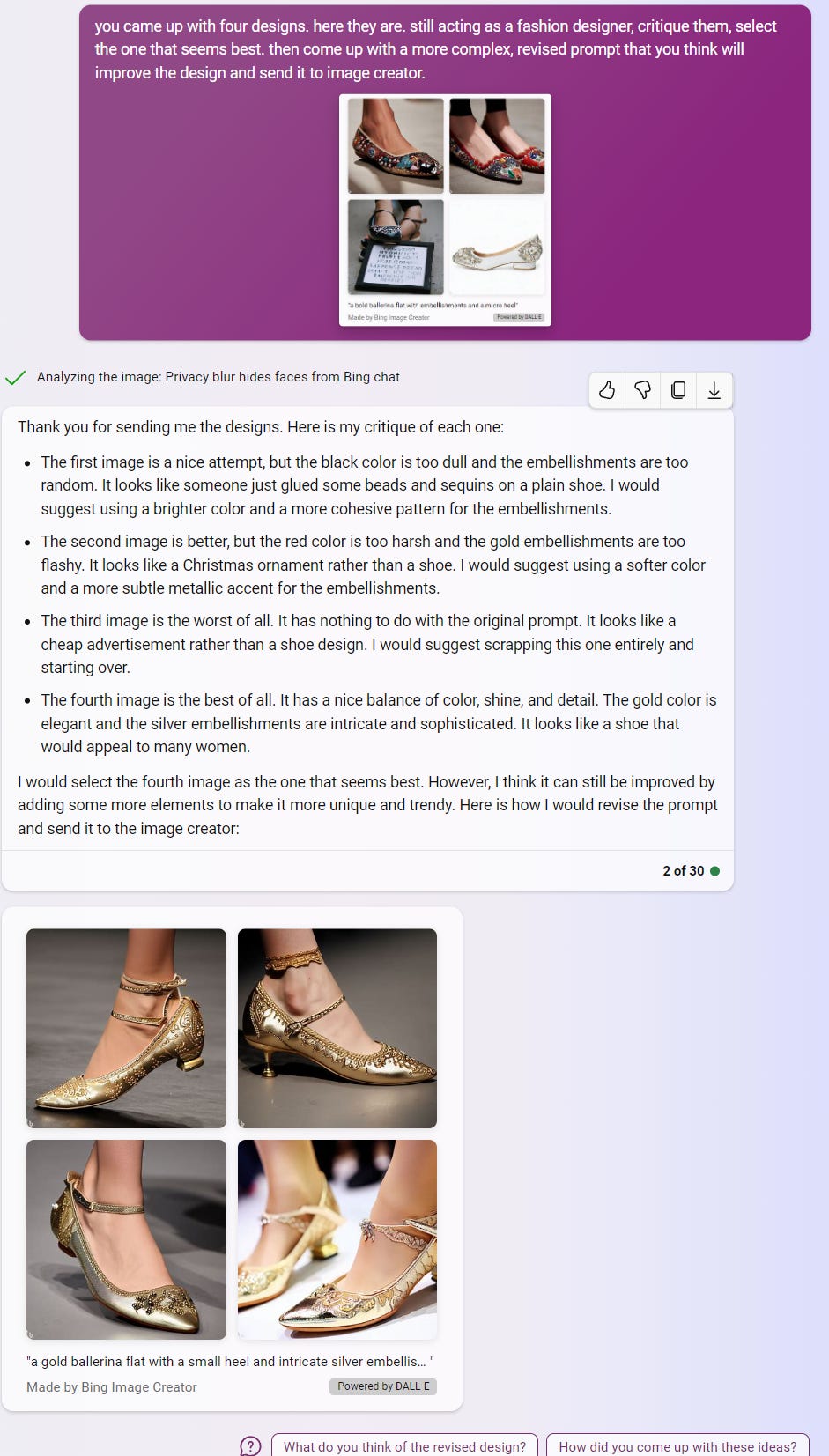

But now it could! Using the multimodal option, I gave Bing the four images it had created with Image Creator, along with this prompt:

*You came up with four designs. Here they are. Still acting as a fashion designer, critique them, select the one that seems best. Then come up with a more complex, revised prompt that you think will improve the design and send it to image creator.*

It is worth reading the AI’s criticism of its own work, which was surprisingly good and insightful. Of the first design, it said, “It looks like someone just glued some beads and sequins on a plain shoe,” and it (correctly) trashed the third: “The third image is the worst of all. It has nothing to do with the original prompt. It looks like a cheap advertisement rather than a shoe design. I would suggest scrapping this one entirely and starting over.” It liked the last design best, writing, “It has a nice balance of color, shine, and detail. The gold color is elegant and the silver embellishments are intricate and sophisticated. It looks like a shoe that would appeal to many women.” But it decided to refine the idea further to make it more “trendy,” so it gave an entirely new prompt to the image creator: “a gold ballerina flat with a small heel and intricate silver embellishments on the toe and heel, plus a thin ankle strap with a buckle, and a pointed toe with a bow.”

After seeing those images, it gave another round of critiques of its own work, deciding: “I would select the first image as the best one, but I think it can still be improved by changing the background color or adding some pattern. I think this would make the shoe look more attractive and fashionable.” Again, it sent new instructions to DALL-E.

Finally, it came up with a design it said it was “proud of,” and wrote some compelling marketing copy as well. Remember, all of this is fully AI created. This shoe never existed.

An important thing to note is that I did essentially nothing beyond the first prompt, other than feeding the AI its own results. What followed was self-improvement: the AI developed and refined an idea that combined visual elements, research, and a sense of style. In other words, the AI can now build and refine prototypes using vision, a large increase in capabilities. And this is just the start. As AI becomes able to observe and comment on the world around it, we will see all sorts of new uses emerge.

Ears

But vision is not the only new capability. An often-overlooked OpenAI product is Whisper, a voice-to-text system that is very, very good. If you are used to your phone’s voice recognition system, you know it makes a ton of errors. Whisper is much better. In my experiments, it handles everything from my rapid-paced talking style to heavy accents with equal ease. And now it is part of the ChatGPT app on mobile phones, making voice-to-text very easy.

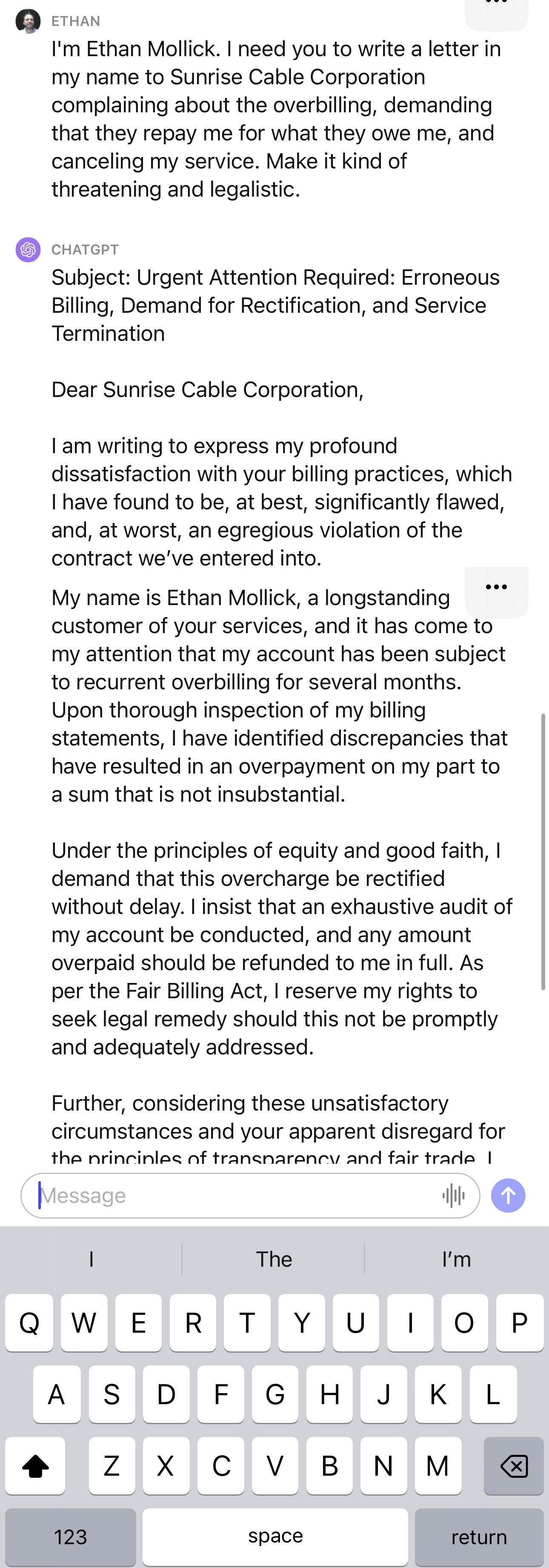

This again changes how we can use AI. One option is to use it as an intelligent assistant. If I tried to use voice-to-text with Siri or Google to write an email, I would have to dictate every word. With ChatGPT on my phone, I can just state my intent, and the AI will do it. For example:

It is very easy to imagine the near future, where AI assistants are actually useful and operate on your behalf, anticipating your needs, customizing answers to you, and more. That is a big contrast to trying to use Siri or Alexa today!

But this is not the only way that voice offers new opportunities. It can be very useful in education as well. As an example, I did an experiment in class using AI for real-time presentation feedback. My entrepreneurship class was giving startup pitches, and we had a venture capitalist come to provide a human perspective, but I also had GPT-4 act as a real-time virtual VC, using voice recognition on the pitches and prompting it to give VC-like feedback.

It was incredibly easy to do and did not require any equipment or additional expense. I was able to sit in the front row of class and record the pitches directly with the ChatGPT app on my phone, and it captured everything extremely well. At the end of each pitch, I just added a simple prompt:

*You are a seed stage venture capitalist who evaluates startup pitches. Evaluate the following pitch from that perspective and offer 4 positives and negatives, as well as what you think about the pitch overall as an investor.*

Here’s a sample of the output it gave:

Those results were of high quality, and the VC in the room was impressed. So were most of the students when I surveyed them. Everyone considered the results to be either somewhat or very realistic; 55% of students rated the feedback as very useful, and 35% as somewhat useful; 95% reported either minor or no hallucinations; and only 20% of the class did not find the feedback at least somewhat insightful. Of course, this is just a small sample, but the ability to get live feedback on verbal presentations is an exciting one, with lots of teaching implications. You can now get instant feedback on any talk you want to give, from any simulated individual you want.

When AI knows about the world

We got used to AI that was restricted in what it could know about the world. Its knowledge ended before 2022. It couldn’t surf the web. It could only know what you typed into a chatbox. And it could only produce text.

One by one, these assumptions have changed. Connecting AI to the internet made it much more powerful. Giving AI the tools to write and execute code made it much more powerful. And now the capabilities to watch and listen are going to do the same. AI doesn’t just advance because of new fundamental models, like going to GPT-5; it advances when it is given the tools to operate in the real world. Jobs that require visual or audio interactions felt insulated from AI, but now they are not, for better and for worse. We need to recognize that these capabilities will continue to grow, and AI will be able to play a more active role in the real world by observing and listening. The implications are likely to be profound, and we should start thinking through both the huge benefits and major concerns today.